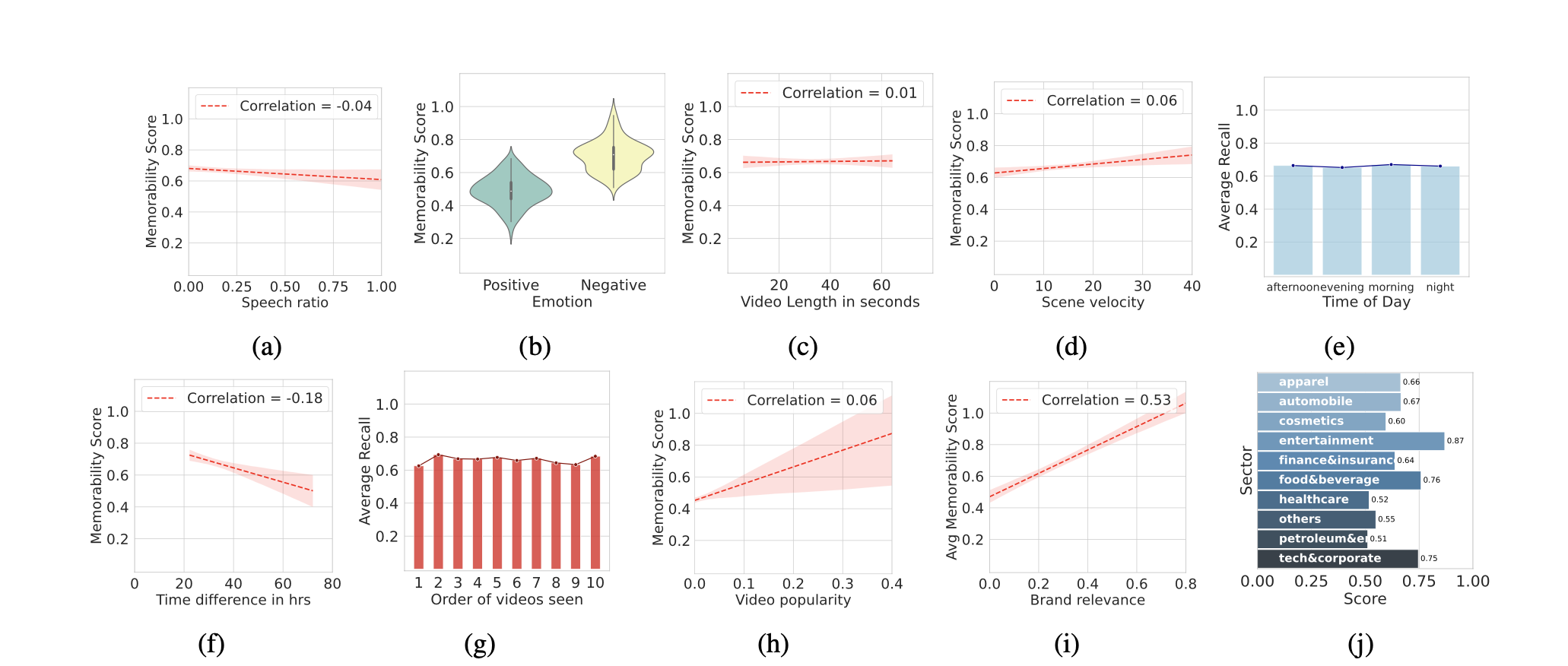

- Food, entertainment, and tech industries have the most memorable ads.

- The length of an ad has minimal impact on its memorability.

- Ads with rapid scene transitions are more memorable than slower-paced counterparts.

- High-level features such as object and scene semantics play a crucial role in ad recall. Ads showing people are significantly more memorable than those featuring objects.

- Ads evoking negative emotions tend to stay in memory longer than those with positive sentiments.

Long-Term Ad Memorability: Understanding and Generating Memorable Ads

Contact behavior-in-the-wild@googlegroups.com with questions and suggestions.